Promptable 3D Is Here: Why AIGC Content Operations Are About to Go Spatial

It is Monday morning, and your eCommerce lead is already in a negotiation.

A marketplace wants richer product views. Retail asks for “view in room.” Social wants short videos with the product rotating, floating, and landing in context. The brand team wants all of it to be premium, consistent, and localized for every market.

None of that is unreasonable.

What is unreasonable is trying to deliver it with a pipeline built for hero assets.

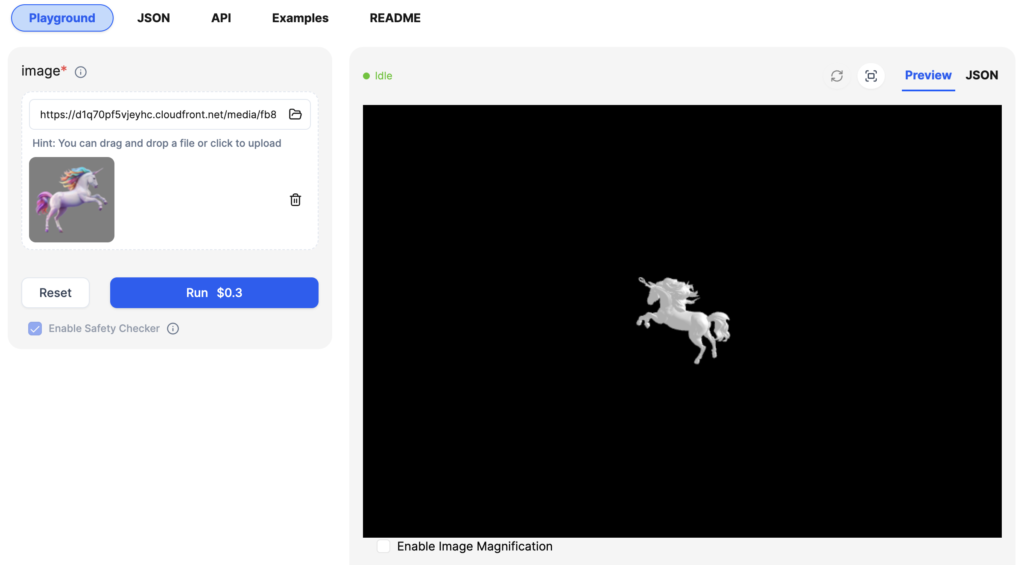

This is why promptable 3D matters. Not because it looks cool, but because it changes the unit of production.

When 3D becomes easier to generate and easier to edit, it stops being “the CGI job.” It becomes a repeatable input to everyday content.

3D Is Becoming The New Master Asset

Most teams still work with flat assets.

You produce a packshot. Then you produce a lifestyle image. Then you cut a video. Then you adapt for six markets and twelve platforms. Each step creates more rework.

Promptable 3D flips that logic.

Instead of starting with an image, you start with an object.

If the object is accurate and controllable, everything downstream becomes lighter:

- Any angle becomes available without reshoots

- Lighting and environments become variables, not new productions

- Short video becomes templated camera moves, not fragile frame generation

- AR and retail experiences stop being separate projects

The best analogy is the move from static design files to design systems.

A design system is not “more design.” It is the rules that let design scale without breaking. A good 3D master asset works the same way. It is a product system for visuals.

From Tools To Systems

A lot of AIGC adoption has been tool-first.

Generate an image. Clean the background. Expand the canvas. Make five variants. Ship.

That works until volume hits.

Promptable 3D pushes teams toward system-first thinking.

The strategic question is no longer, “How do we make this one asset?”

It becomes, “What is the reusable asset we can deploy across touchpoints?”

That shift sounds subtle. It is not.

It changes budgets, timelines, roles, and how you brief creative work.

What Gets Better First

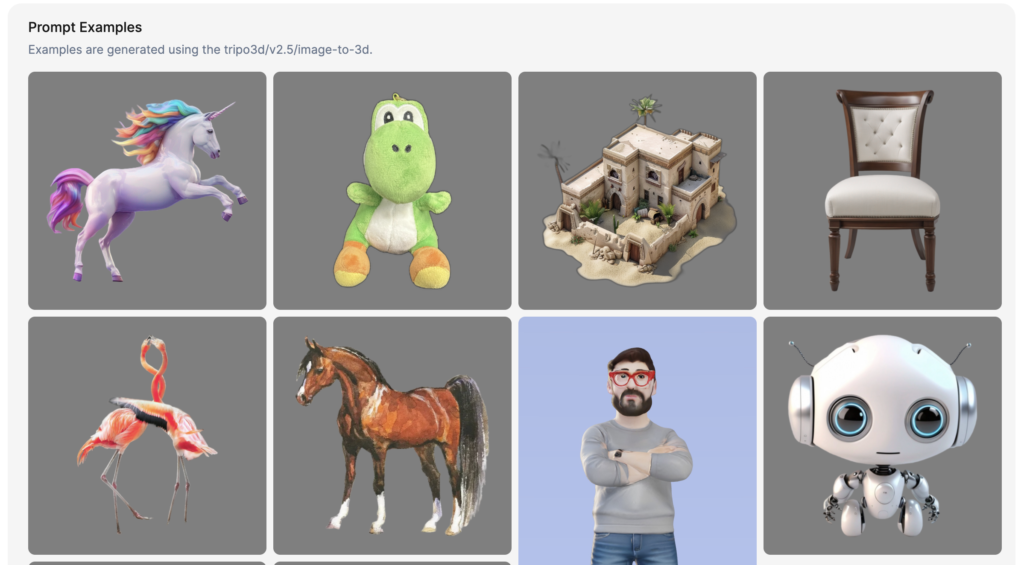

Not every category benefits equally on day one.

The early wins show up where two things are true.

You need consistency, and you need variations.

That is why we expect faster adoption in:

- Home and living (scale, room context, layout)

- Beauty devices and appliances (materials, surfaces, repeatable angles)

- Consumer electronics (feature moments, ports, interfaces, light behavior)

- Fashion accessories (hardware details, stitching, logo control)

These categories already rely on heavy adaptation. They already fight approval cycles. They are already punished by inconsistency.

3D master assets reduce that pain by anchoring every output to a single source of product truth.

Creative Intelligence Becomes The Bottleneck

Here is the uncomfortable part.

As 3D becomes easier to generate, access stops being the differentiator. Direction becomes the differentiator.

We saw this with AI images.

The internet is full of AI imagery. Very little of it is usable by a brand. Not because the models are weak, but because the output is not governed.

The same will happen with 3D.

The failure modes are predictable:

- The generated object is almost right, which is worse than being obviously wrong

- Materials drift (gloss becomes matte, metal becomes plastic)

- Logos warp, proportions shift, and packaging details disappear

- Teams generate volume, but approvals collapse under confusion

That is not a tech problem. It is a production design problem.

Creative intelligence is what makes promptable 3D scalable. In practice, it looks like:

- clear rules for what must never change (logo, proportions, key materials)

- controlled flexibility for what can change (environment, props, lighting mood)

- prompt libraries and reference sets built from approved brand assets

- QA that checks product truth, not just aesthetic quality

- an approval loop designed for throughput, not perfection theatre

This is where “AI native, human-led” stops being a slogan.

The model can generate. Humans define what “on brand” means, then build a system that reproduces it at volume.

Where Agents Actually Help

Most leaders hear “agents” and think software hype. Ignore the word and focus on the job.

Spatial production has repeatable steps. Repeatable steps are where agent workflows earn their keep.

Examples of what you can systematise:

- consistent object extraction across a SKU set

- applying scene rules at scale (camera angles, lighting directions, shadows)

- generating format variants automatically (marketplace, social, retail screen)

- flagging likely issues before human review (logo distortion, missing details)

- routing only the right assets into human review, not everything

The goal is not to replace creatives. It is to reduce waste.

You want humans spending time on taste, storytelling, and brand truth, not on repetitive formatting and triage.

A Playbook That Works In The Real World

If you lead content today, the smartest move is not to build a 3D team.

It is to run a pilot that looks like real production.

Pick one lane with clear demand, high volume, and measurable outcomes. Then build the system around it.

Do this:

- Start with one category and a tight SKU set

- Define truth checks (logo, proportions, materials, key claims)

- Build a reference library (approved angles, lighting, contexts, and a “do not” list)

- Lock the output set upfront (PDP pack, short-video templates, retailer variants)

- Measure cycle time, approvals, revisions, and deployment speed

Avoid this:

- Starting with your hardest product

- Judging success on a single hero output

- Letting the workflow become tool-led instead of brief-led

- Scaling before QA and governance exist

The Decision In The Next 12 To 24 Months

Content volume is no longer a strategy. It is the baseline.

The strategy is building production systems that generate variation without losing brand truth.

Promptable 3D accelerates that shift because it turns the product into a reusable source, not a one-off image.

The winners will not be the teams that found a cool 3D demo first.

They will be the teams that treated 3D like content operations, built creative intelligence around it, and made it shippable at scale.