Turning AI Video Up to 10: How AI Video Learns to Sound Alive

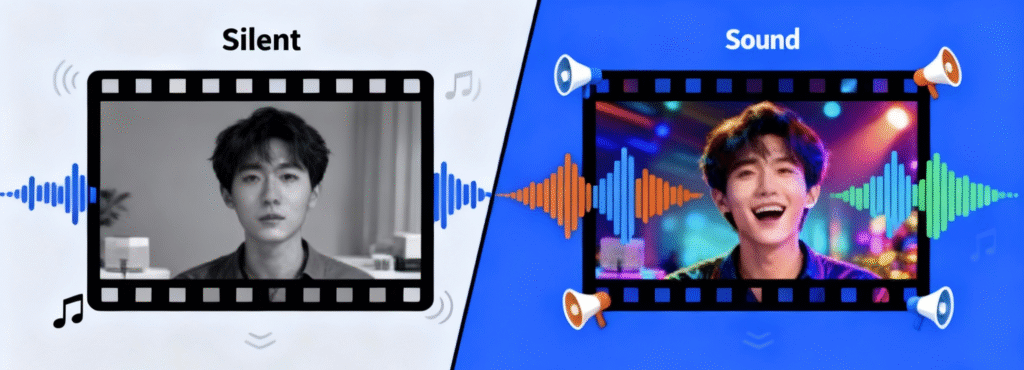

AI video has been racing ahead on the visual side. But most AIGC films still feel like watching a beautiful spot on mute: stunning frames, thin or generic sound. That gap matters. In traditional production, Foley artists rebuild the tiny sounds that make a scene feel real: fabric brushing, glasses clinking, shoes on different surfaces. It is slow, expert work, and until now it has been one of the hardest parts of production for AI to emulate.

Tencent’s Hunyuan lab has just moved that frontier. Its model, HunyuanVideo-Foley, can “listen” to a video and generate high-quality, in-sync sound that follows the action on screen. For anyone working with AIGC, it quietly unlocks a new layer of creative control.

At HubStudio, we see this as a step change. We can now treat AI video as a full sensory format, not just moving images sitting on top of a music bed.

What HunyuanVideo-Foley Actually Fixes

Earlier video-to-audio models suffered from a simple bias. They paid more attention to the text prompt than to the video itself.

If you wrote “ocean waves”, you mostly got waves, even if the scene clearly showed footsteps in the sand and birds overhead. Technically correct, emotionally flat.

The Hunyuan team tackled this from three angles.

- Better training data

They built a roughly 100,000-hour dataset combining video, sound, and text, then filtered out clips with long silences or crushed audio so the model learned from clean, varied material.
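The filtering step is easy to picture in code. The article doesn't publish Hunyuan's actual rules, so what follows is only a minimal sketch under our own assumptions: mono float audio in the range [-1, 1], a hypothetical -50 dBFS silence floor, a 2-second cap on silent stretches, and near-full-scale samples used as a rough proxy for "crushed" (clipped) audio. All function names and thresholds are hypothetical.

```python
import numpy as np

def longest_silence_seconds(audio: np.ndarray, sr: int, frame_ms: float = 20.0,
                            silence_db: float = -50.0) -> float:
    """Length in seconds of the longest run of consecutive silent frames."""
    frame_len = max(1, int(sr * frame_ms / 1000))
    n_frames = len(audio) // frame_len
    if n_frames == 0:
        return 0.0
    frames = audio[: n_frames * frame_len].reshape(n_frames, frame_len)
    rms = np.sqrt(np.mean(frames ** 2, axis=1))
    db = 20 * np.log10(rms + 1e-12)  # dBFS, assuming samples lie in [-1, 1]
    longest = current = 0
    for is_silent in db < silence_db:
        current = current + 1 if is_silent else 0
        longest = max(longest, current)
    return longest * frame_len / sr

def clipped_fraction(audio: np.ndarray, threshold: float = 0.999) -> float:
    """Share of samples at or near full scale: a rough proxy for crushed audio."""
    return float(np.mean(np.abs(audio) >= threshold))

def keep_clip(audio: np.ndarray, sr: int,
              max_silence_s: float = 2.0, max_clipped: float = 0.001) -> bool:
    """Hypothetical filter: reject clips with long silences or heavy clipping."""
    return (longest_silence_seconds(audio, sr) <= max_silence_s
            and clipped_fraction(audio) <= max_clipped)
```

For example, a clean 4-second 440 Hz tone passes, the same tone with its last 3 seconds zeroed out fails the silence check, and the tone driven past full scale and clipped fails the clipping check. A production pipeline would add many more criteria; this only shows the shape of the idea.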

- Visual-first attention

The architecture first locks timing between picture and sound, frame by frame, then uses the text to shape mood and context. That stops prompts from drowning out what is really happening on screen.
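To make that ordering concrete, here is a toy sketch of "visual-first" conditioning: per-frame video features are first resampled onto the audio timeline, so every audio frame is tied to the picture at the same moment, and only then do the audio tokens cross-attend to text features. This is not HunyuanVideo-Foley's actual architecture. It assumes precomputed feature arrays of a shared width, uses nearest-neighbour resampling and a single attention head with no learned weights, and every name in it is hypothetical.

```python
import numpy as np

def align_video_to_audio(video_feats: np.ndarray, n_audio_frames: int) -> np.ndarray:
    """Nearest-neighbour resample of per-frame video features onto the audio timeline."""
    n_video = video_feats.shape[0]
    # Map the centre of each audio frame to the video frame covering the same moment.
    idx = np.floor((np.arange(n_audio_frames) + 0.5) * n_video / n_audio_frames).astype(int)
    return video_feats[np.clip(idx, 0, n_video - 1)]

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attend(queries: np.ndarray, keys_values: np.ndarray) -> np.ndarray:
    """Single-head scaled dot-product attention without learned projections."""
    d = queries.shape[-1]
    weights = softmax(queries @ keys_values.T / np.sqrt(d))
    return weights @ keys_values

def condition_audio(audio_latents: np.ndarray, video_feats: np.ndarray,
                    text_feats: np.ndarray) -> np.ndarray:
    # Step 1: timing comes from the picture, frame by frame.
    h = audio_latents + align_video_to_audio(video_feats, audio_latents.shape[0])
    # Step 2: text only refines a representation that is already time-locked.
    return h + cross_attend(h, text_feats)
```

The point of the ordering is that the text never gets to decide *when* a sound happens; it can only shade the character of sounds whose timing the video has already fixed.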

- Quality guidance built in

During training, a technique called representation alignment (REPA) compares the model’s internal representations with features that a pretrained audio encoder extracts from high-quality reference audio, nudging the model toward richer, more stable sound instead of noisy effects.
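Representation alignment is usually added to training as an extra loss term. Below is a minimal sketch assuming the general REPA recipe: project the generator's intermediate hidden states and pull them toward features of the target audio from a frozen, pretrained encoder using cosine similarity. The plain projection matrix, the 0.5 weight, and the function names are hypothetical, and the specific encoder and layers used by HunyuanVideo-Foley are not covered in this article.

```python
import numpy as np

def repa_style_loss(hidden: np.ndarray, ref_feats: np.ndarray, proj: np.ndarray) -> float:
    """Negative mean cosine similarity between projected hidden states and reference features.

    hidden:    (T, d_model) intermediate activations from the generator
    ref_feats: (T, d_ref) features of the target audio from a frozen, pretrained encoder
    proj:      (d_model, d_ref) learnable projection (a plain matrix in this sketch)
    """
    z = hidden @ proj
    z = z / (np.linalg.norm(z, axis=1, keepdims=True) + 1e-8)
    r = ref_feats / (np.linalg.norm(ref_feats, axis=1, keepdims=True) + 1e-8)
    # -1 when every frame points the same way as its reference, +1 when opposite.
    return -float(np.mean(np.sum(z * r, axis=1)))

def total_loss(generation_loss: float, hidden: np.ndarray, ref_feats: np.ndarray,
               proj: np.ndarray, weight: float = 0.5) -> float:
    """Main generation loss plus a weighted alignment term (weight is hypothetical)."""
    return generation_loss + weight * repa_style_loss(hidden, ref_feats, proj)
```

Because the alignment term is minimised alongside the main objective, the model is steadily pushed toward internal features that "look like" clean, well-recorded audio, which is the intuition behind the quality gains described above.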

In the team’s evaluations, human listeners consistently rated HunyuanVideo-Foley’s audio as more realistic and better synchronised than that of other models. For creators, that means we can now generate believable Foley tracks for AI-generated or live-action video at production speed.

Why This Matters For Brands

Once sound is as generative as visuals, three things shift immediately.

The content feels premium, not prototype

Viewers subconsciously judge quality through audio. Wrong reverb, missing micro-sounds, or late impacts read as “cheap” faster than any visual artifact. When sound and action line up, a six-second shoppable clip suddenly feels like a finished piece, not a test.

Sonic memory enters the optimisation loop

Branding is often visual first. But sound is what sticks: a specific pour, a click, a subtle cue before a logo. With generative Foley, we can create and test multiple sonic versions of the same asset and see which combinations drive better attention, recall, and share behaviour.

Scale no longer means sameness

Right now, many AI videos share the same royalty-free tracks. With video-to-audio models, we can produce context-tuned soundscapes at scale.

- Morning versus late-night versions of the same film

- Different city ambience for one product story

- Everyday versus festival variants, using local cues

The asset count stays high, but the world inside each film feels specific instead of generic.

New Creative Passes We Can Run

At HubStudio, we are building this capability into our AIGC pipeline in three ways.

1. Sound passes for AI spots

For concept films, e-commerce loops, and social assets built with AIGC, we can now:

- Take silent or music-only cuts and add scene-accurate sound in minutes

- Version audio by platform: lighter on Xiaohongshu, more punch on Douyin, more restrained for the brand’s own website

- Prototype sonic branding elements directly inside finished scenes, not just as standalone stings

Think of it as sound grading: an extra pass that takes a piece from “good AI test” to “usable creative”.

2. Localised audio without reshoots

Localisation now goes beyond faces, language, and props. With generative audio, we can:

- Swap in city-specific ambiences while keeping the same visual master

- Reflect regional rituals and textures in sound, from street markets to fireworks

- Test how different sound worlds change perceived price point, mood, or category fit

For brands operating across China and beyond, audio becomes part of the transcreation toolbox, not a fixed layer.

3. Building sonic systems, not one-offs

Because we can generate many variations, we can start thinking in systems.

- Define a sonic palette for your brand: recurring textures, patterns, and spaces

- Encode that into prompt packs and negative prompts so every new asset starts in the right sound world

- Optimise against performance metrics where audio is a variable, then fold learnings back into your creative OS

The output is not random AI sound, but a recognisable sonic signature that emerges across hundreds of assets.

How HubStudio Plugs This Into Your Ecosystem

Our belief is simple. AI should amplify creative intent, not replace it.

With tools like HunyuanVideo-Foley, we start from the idea, story, and platform, then:

- Decide where generative audio adds value and where human sound design still leads

- Translate your brand guidelines into sonic rules so experiments stay on brand

- Use performance data to refine both visual and audio generation together

Audio was the missing piece that kept many AIGC videos in “experiment” territory. With lifelike, in-sync sound now possible at scale, we can move from silent prototypes to immersive, shippable stories at the speed your campaigns demand.